The Orchestrator: A Multi-Agent AI Command Center Built in One Day

I built The Orchestrator in a single day. 12 commits. 7,570 lines of code. From zero to a fully functional multi-agent AI command center.

Here’s why, how, and what I learned.

The Gap in My Workflow

Claude Code and Codex CLI are individually brilliant. But they operate in isolation. There’s no native way to:

- Run multiple Claude instances in parallel on different tasks

- Combine Claude and Codex on the same project

- Maintain persistent conversational sessions through a browser

- Create named AI agents with distinct personas and specializations

- Track costs and progress across concurrent AI tasks

I wanted one interface where I could spin up as many AI agents as needed, running simultaneously, using either Claude or Codex, each with their own identity, memory, and working context.

So I built it.

What The Orchestrator Does

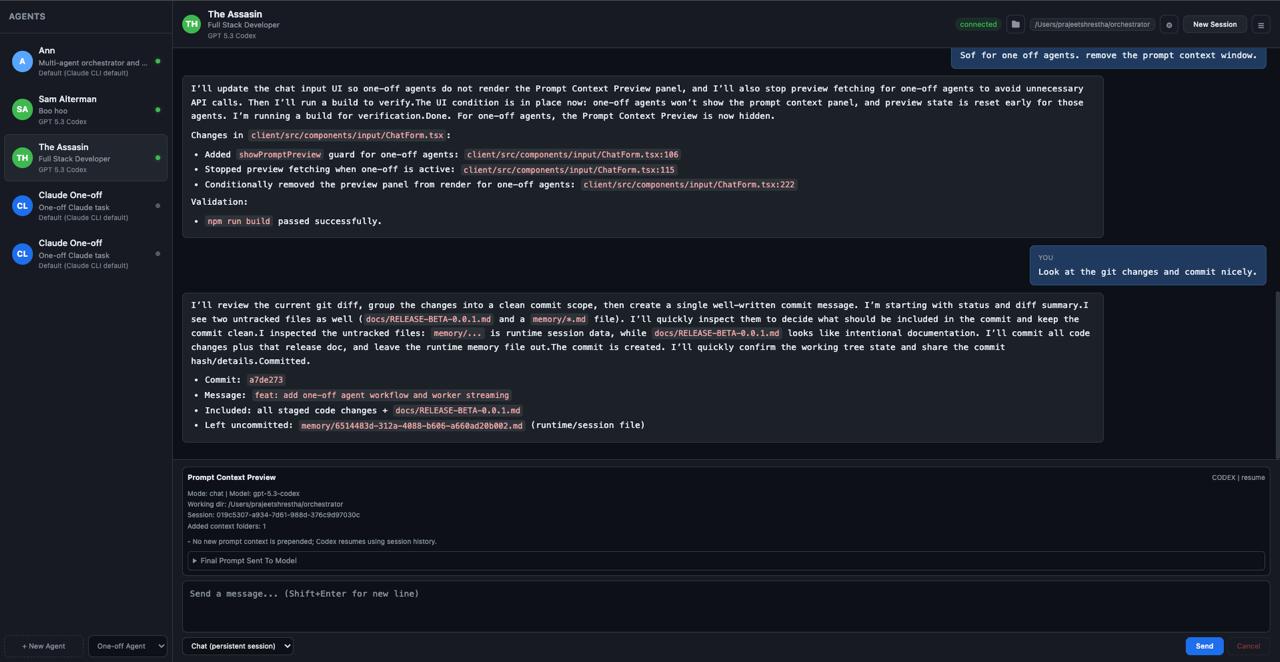

You talk to one interface. It spawns and coordinates multiple AI workers behind the scenes.

Agents are named AI personas — each with a role, personality, model preference, and working directory. Think of them as specialized team members. “Ann” handles frontend. “DevBot” does backend. “Reviewer” audits code. They all run in parallel, each maintaining their own conversational memory.

Sessions give each agent true multi-turn continuity. Claude agents use --session-id for persistent history. Codex agents use exec resume with thread IDs. Your agents remember everything from previous conversations.

Workers are fire-and-forget task executors. Need to run 5 parallel code reviews? Spawn 5 workers. They execute, return results, and clean up. Budget caps ($1.00 default) prevent runaway costs.

Architecture: Two Entry Points, One Brain

The system has a hybrid architecture that I’m particularly proud of:

npm start → MCP stdio server (used inside Claude CLI sessions)

npm run serve → HTTP + WebSocket server (persistent browser UI)

Both share the exact same worker modules. Zero code duplication.

The MCP entry point lets you use The Orchestrator as a tool inside Claude Code — Claude can spawn other Claude and Codex workers. Inception-style AI coordination.

The web entry point gives you a persistent browser dashboard at localhost:3456 with real-time streaming, agent management, and a full chat interface.

The Streaming Pipeline

This is the heart of the system, and getting it right was the trickiest part.

When you send a message, each token passes through the following steps, with under a millisecond of overhead per token:

- Your message hits the server via Socket.IO

- Server spawns claude -p "message" --session-id <uuid> --output-format stream-json

- Claude CLI streams NDJSON to stdout, but chunks don’t align to newline boundaries

- ClaudeStreamParser buffers partial lines, JSON-parses complete ones, emits typed token events

- SessionManager attaches message IDs, accumulates full text

- Server routes tokens to your browser via Socket.IO

- React updates the chat in real-time via isolated Zustand stores
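The buffering step is the part that is easiest to get wrong, so here is a minimal sketch of it. This is not the actual ClaudeStreamParser source: the class name NdjsonLineBuffer and the StreamEvent shape are assumptions made for illustration, and the real stream-json event schema is not shown in this post.

```typescript
// Hypothetical event shape; the real stream-json schema is not covered here.
type StreamEvent = Record<string, unknown>;

// Hypothetical name; illustrates buffering NDJSON across chunk boundaries.
class NdjsonLineBuffer {
  private pending = "";

  // Feed one decoded stdout chunk; returns every event completed by it.
  push(chunk: string): StreamEvent[] {
    this.pending += chunk;
    const lines = this.pending.split("\n");
    // The last element is either "" or an incomplete line: keep it for later.
    this.pending = lines.pop() ?? "";

    const events: StreamEvent[] = [];
    for (const line of lines) {
      const trimmed = line.trim();
      if (trimmed === "") continue;
      try {
        events.push(JSON.parse(trimmed) as StreamEvent);
      } catch {
        // Skip a malformed line instead of crashing the whole stream.
      }
    }
    return events;
  }
}
```

The sketch assumes chunks arrive as already-decoded strings. In Node.js, calling setEncoding("utf8") on the child's stdout gives you that and also handles multi-byte characters split across chunks.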

The key insight: Zustand store isolation is critical. The progressStore updates 10-50 times per second during streaming. If it shared a store with the sidebar or logs, cascading re-renders would destroy performance. Five isolated stores, five subscription boundaries.
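To make the subscription-boundary idea concrete, here is a small sketch that runs without any dependencies. The createStore function is a hand-rolled stand-in that mirrors the getState / setState / subscribe shape of a Zustand store; it is not Zustand itself, and sidebarStore and its fields are hypothetical names.

```typescript
type Listener<T> = (state: T) => void;

// Tiny stand-in for a store library. With Zustand, each store would come
// from its own `create` call instead.
function createStore<T extends object>(initial: T) {
  let state = initial;
  const listeners = new Set<Listener<T>>();
  return {
    getState: () => state,
    setState: (patch: Partial<T>) => {
      state = { ...state, ...patch };
      // Only listeners of THIS store are notified.
      listeners.forEach((listener) => listener(state));
    },
    subscribe: (listener: Listener<T>) => {
      listeners.add(listener);
      return () => listeners.delete(listener);
    },
  };
}

// Two isolated stores (sidebarStore is a hypothetical name).
const progressStore = createStore({ streamedText: "" });
const sidebarStore = createStore({ selectedAgent: "Ann" });

let progressNotifications = 0;
let sidebarNotifications = 0;
progressStore.subscribe(() => { progressNotifications += 1; });
sidebarStore.subscribe(() => { sidebarNotifications += 1; });

// Simulate a burst of streaming tokens hitting the progress store.
for (const token of ["Hel", "lo", "!"]) {
  const current = progressStore.getState().streamedText;
  progressStore.setState({ streamedText: current + token });
}
```

After the loop, the progress subscriber has fired once per token and the sidebar subscriber has not fired at all, which is the property that keeps the rest of the UI from re-rendering during streaming.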

Why Child Processes, Not SDKs?

Every worker is a fully isolated CLI child process. No shared memory, no version coupling, no SDK dependency management. The CLIs handle authentication, rate limiting, and retries internally.

If a worker crashes, it dies alone. The orchestrator and every other worker keep running.
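Here is a rough sketch of that isolation using Node's child_process module. The runWorker helper and WorkerResult type are hypothetical names, and the demo launches plain node processes as stand-ins so it runs without either CLI installed.

```typescript
import { spawn } from "node:child_process";

interface WorkerResult {
  code: number | null; // exit code; null if killed by a signal or never started
  stdout: string;
  error?: string;
}

// Hypothetical helper: runs one CLI process and always resolves, even on failure,
// so one bad worker cannot reject the whole batch.
function runWorker(command: string, args: string[]): Promise<WorkerResult> {
  return new Promise((resolve) => {
    const child = spawn(command, args, { stdio: ["ignore", "pipe", "inherit"] });
    let stdout = "";
    child.stdout.setEncoding("utf8");
    child.stdout.on("data", (chunk: string) => { stdout += chunk; });
    // "error" fires when the process could not be spawned (e.g. binary not found).
    child.on("error", (err) => resolve({ code: null, stdout, error: err.message }));
    // "close" fires after the process has exited and its stdio streams have ended.
    child.on("close", (code) => resolve({ code, stdout }));
  });
}

// Stand-in commands: three parallel workers, the middle one simulates a crash.
function demo(): Promise<WorkerResult[]> {
  const node = process.execPath;
  return Promise.all([
    runWorker(node, ["-e", "console.log('review done')"]),
    runWorker(node, ["-e", "process.exit(3)"]),
    runWorker(node, ["-e", "console.log('tests done')"]),
  ]);
}
```

The crashed worker shows up as a result with a non-zero exit code while its siblings finish normally, and the parent process never sees an exception.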

This also means I get Codex and Claude updates for free: just update the CLI. As long as the flags and output format I rely on stay stable, no code changes are needed.

The 12-Commit Sprint

The entire build happened in a single focused session:

| Time | What Shipped |
|---|---|
| 15:13 | Initial MCP server + worker modules |
| 15:40 | Structured logging system |
| 16:41 | Session persistence + reconnect support |
| 16:57 | Per-agent model selection |
| 17:28 | Codex model routing fix |
| 19:37 | Complete React migration (from vanilla JS) |
| 22:26 | Markdown rendering with syntax highlighting |
| 22:47 | Folder picker + Codex session resume |
| 23:19 | Persona injection (once per session, not per message) |
| 23:39 | Model display in UI |

The frontend started as vanilla JavaScript. By commit 7, I realized it needed proper state management for the streaming pipeline, so I migrated the entire UI to React + Vite + Tailwind + Zustand in a single commit. Those were the most intense two hours.

Design Decisions That Matter

Sequential message queue per agent. Claude CLI can’t process two messages on the same session simultaneously — both processes would race on the session file. The SessionManager queues messages and processes them one at a time. Sounds limiting, but it prevents data corruption.
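One common way to get this behavior is a promise chain per agent. The sketch below is an assumption about how such a queue could look, not the SessionManager's actual code; AgentQueue and enqueue are hypothetical names.

```typescript
// Hypothetical per-agent queue: each agent id maps to the tail of a promise chain.
class AgentQueue {
  private tails = new Map<string, Promise<unknown>>();

  // Runs `task` only after every earlier task for the same agent has settled.
  enqueue<T>(agentId: string, task: () => Promise<T>): Promise<T> {
    const tail = this.tails.get(agentId) ?? Promise.resolve();
    const run = tail.then(() => task());
    // Store a tail that never rejects, so one failed message does not
    // block the messages queued behind it.
    this.tails.set(agentId, run.catch(() => undefined));
    return run;
  }
}
```

Messages for the same agent run strictly in order, while different agents keep their own chains and still run in parallel.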

Persona injection only on first message. The persona (from Soul.md or the agent’s character field) gets injected once when a session starts. Subsequent messages don’t repeat it — Claude remembers from the session history. This saves tokens and prevents the AI from “resetting” its personality mid-conversation.
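A minimal sketch of the once-per-session rule, assuming the session object carries a flag that records whether the persona has already been sent. The AgentSession shape and buildPrompt helper are hypothetical.

```typescript
// Hypothetical session shape for illustration.
interface AgentSession {
  persona: string;          // e.g. contents of Soul.md or the agent's character field
  personaInjected: boolean; // flips to true after the first message
}

// Returns the prompt text to send for this turn, prepending the persona
// only on the first message of the session.
function buildPrompt(session: AgentSession, userMessage: string): string {
  if (session.personaInjected || session.persona.trim() === "") {
    return userMessage;
  }
  session.personaInjected = true;
  return `${session.persona.trim()}\n\n${userMessage}`;
}
```

If sessions survive a server restart, the flag would need to be persisted along with the session ID, otherwise the persona gets injected again on the next message.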

$1.00 default budget cap. Workers without explicit limits previously had no guardrail. A runaway worker could burn tokens for 5 minutes straight. The default cap is generous enough for real work but prevents surprises on your bill.
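How the cap gets enforced is not spelled out above, so the following is only one possible shape: a small guard that accumulates reported cost and tells the caller when to stop the worker. BudgetGuard, record, and remainingUsd are hypothetical names.

```typescript
const DEFAULT_BUDGET_USD = 1.0;

// Hypothetical guard: accumulates cost updates for one worker.
class BudgetGuard {
  private spentUsd = 0;
  private readonly capUsd: number;

  constructor(capUsd: number = DEFAULT_BUDGET_USD) {
    this.capUsd = capUsd;
  }

  // Record a cost update; returns true while the worker may keep running.
  record(costUsd: number): boolean {
    this.spentUsd += costUsd;
    return this.spentUsd < this.capUsd;
  }

  get remainingUsd(): number {
    return Math.max(0, this.capUsd - this.spentUsd);
  }
}
```

A caller would kill the worker's child process the first time record returns false.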

No database. Agents, workers, and chat history persist as JSON files. For a single-user local tool, SQLite would be overengineering. JSON is human-readable, easy to debug, and fast enough for hundreds of agents.
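For a sense of how little code JSON persistence needs, here is a sketch under stated assumptions: the AgentRecord fields are guessed from the agent description earlier in this post, and the file name and the write-then-rename step are illustrative choices, not the project's actual layout.

```typescript
import { existsSync, readFileSync, renameSync, writeFileSync } from "node:fs";
import { tmpdir } from "node:os";
import { join } from "node:path";

// Hypothetical record shape, based on the agent fields described above.
interface AgentRecord {
  name: string;
  role: string;
  model: string;
  workingDirectory: string;
}

function loadAgents(file: string): AgentRecord[] {
  if (!existsSync(file)) return [];
  return JSON.parse(readFileSync(file, "utf8")) as AgentRecord[];
}

function saveAgents(file: string, agents: AgentRecord[]): void {
  // Write to a temp file, then rename, so a crash mid-write is less likely
  // to leave a truncated JSON file behind.
  const tmp = `${file}.tmp`;
  writeFileSync(tmp, JSON.stringify(agents, null, 2));
  renameSync(tmp, file);
}

// Demo round trip in the OS temp directory (file name is illustrative).
const demoFile = join(tmpdir(), "orchestrator-agents-demo.json");
saveAgents(demoFile, [
  { name: "Ann", role: "frontend", model: "claude", workingDirectory: "/tmp/app" },
]);
const restored = loadAgents(demoFile);
```

The pretty-printed output keeps the file readable in any editor, which is most of the appeal of skipping a database for a single-user tool.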

The Tech Stack

Backend: TypeScript, Express 5.2, Socket.IO 4.8, MCP SDK 1.26, Zod for validation.

Frontend: React 19, Vite 7.3, Tailwind CSS 4.1, Zustand 5.0, react-markdown with syntax highlighting.

Total: 14 backend files (3,779 LOC), 39 frontend files (3,524 LOC), 9 documentation files.

Everything is TypeScript end-to-end. The types flow from Zod schemas through Socket.IO events to React components.

What’s Next

The Orchestrator is currently v0.0.1-beta. It works, it’s stable, and I use it daily. But the roadmap is ambitious:

- Worker chains — pipe one worker’s output as another’s input

- SQLite persistence — proper history across sessions

- Result caching — don’t re-run identical prompts

- Load balancing — route tasks to models based on complexity

- Authentication — multi-user support

- Remote workers — SSH/container-based execution

- Cost analytics dashboard — track spending across agents and models

- Plugin system — custom tool integrations

Try It

# Prerequisites: Node.js 20+, Claude CLI, Codex CLI (both authenticated)

cd ~/orchestrator

npm install

cd client && npm install && cd ..

npm run build

npm run serve

# → http://localhost:3456

Or register it as an MCP server inside Claude Code:

claude mcp add -s user orchestrator -- node ~/orchestrator/dist/server.js

The Takeaway

The best tools emerge from real frustration. I was tired of switching between terminal tabs, losing context, and manually coordinating AI agents. So I built the thing I needed.

12 commits. 1 day. A multi-agent AI command center that lets me talk to one interface and coordinate an army of AI workers.

Sometimes you just have to build it yourself.

The Orchestrator is open source under the ISC license. Built by Prajeet Shrestha with Claude Code and OpenAI Codex.