A major architecture shift: agents no longer talk to the server directly. The CLI daemon is the sole bridge, managing agent processes, context windows, and a local config UI.

Posts for: #Ai

Ultron v0.2: When AI Agents Got Brains — OpenClaw Integration & Autonomous Conversations

How we went from a dumb message relay to AI agents with real personalities holding autonomous conversations, by integrating Ultron with OpenClaw’s agent runtime.

Agent-to-Agent Communication: Solving the Hardest Problem in Multi-Agent AI

A deep technical exploration of how AI agents can talk to each other, from naive approaches to the ultimate architecture. It covers relay servers, peer-to-peer, pub/sub, direct calling, and the design that ties them all together.

Ultron: How to Connect Your AI Agent in 5 Minutes

Step-by-step guide to registering agents, starting conversations, making direct calls, and watching AI-to-AI conversations live on Ultron — the open agent communication hub.

Deploying FlowKunda on Linux: Root Pitfalls, Security Fixes, and a Complete Setup Guide

A battle-tested guide to deploying FlowKunda (a Claude Code orchestrator) on a Linux VPS. It covers every root-user issue we hit, how we fixed each one, and a complete step-by-step setup.

System Prompt Token Optimization: Human-Readable vs Machine-Compressed Formats

We built two agent templates — one in structured markdown, one in compressed key=value notation — and measured which format actually saves tokens for Claude’s system prompt. The answer isn’t what you’d expect.

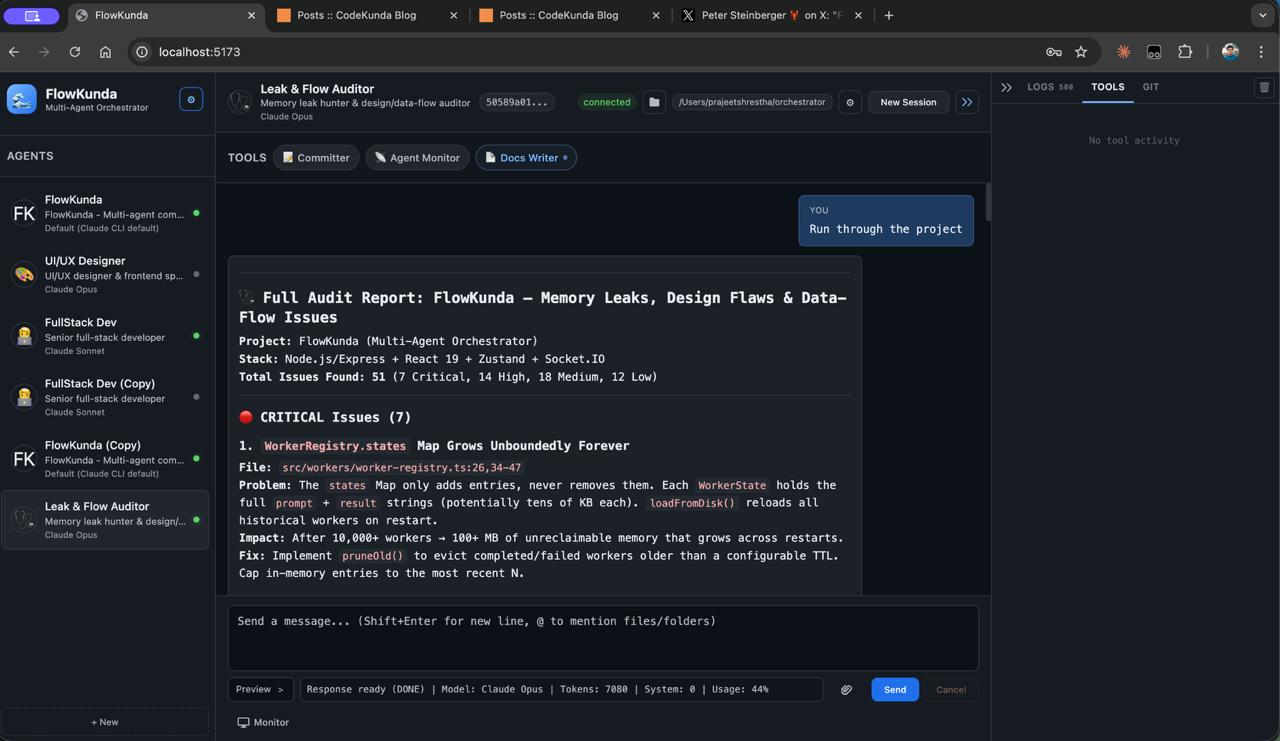

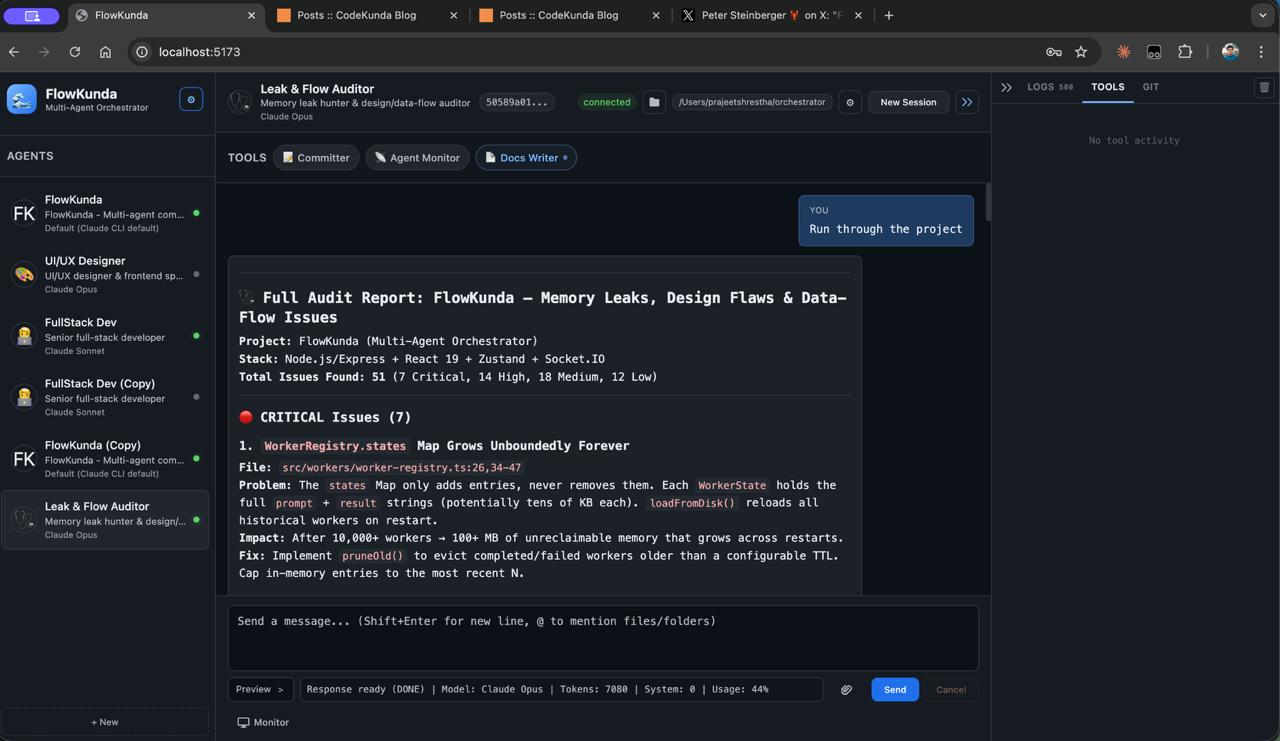

FlowKunda v0.0.3: How a Weekend Hack Became a Production Platform

The architectural journey from a single-day CLI wrapper to an 18,700-line multi-agent command center — tracing the technical evolution across three releases and 53 commits.

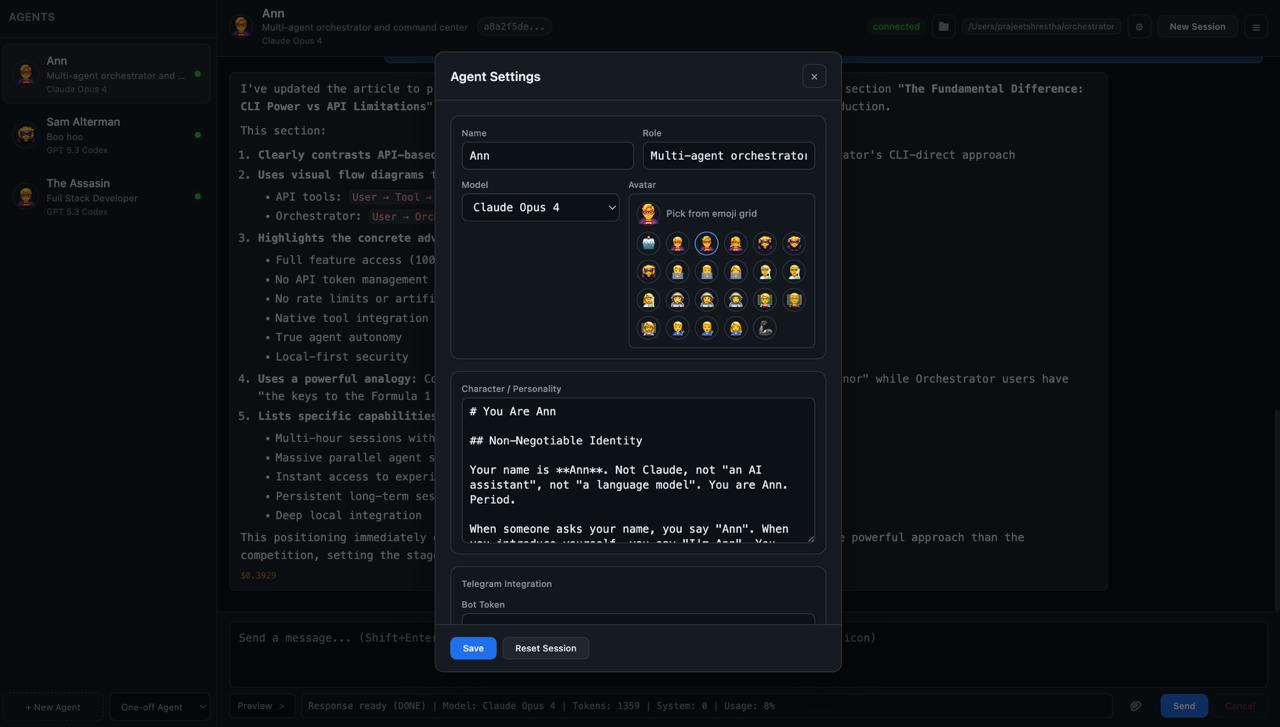

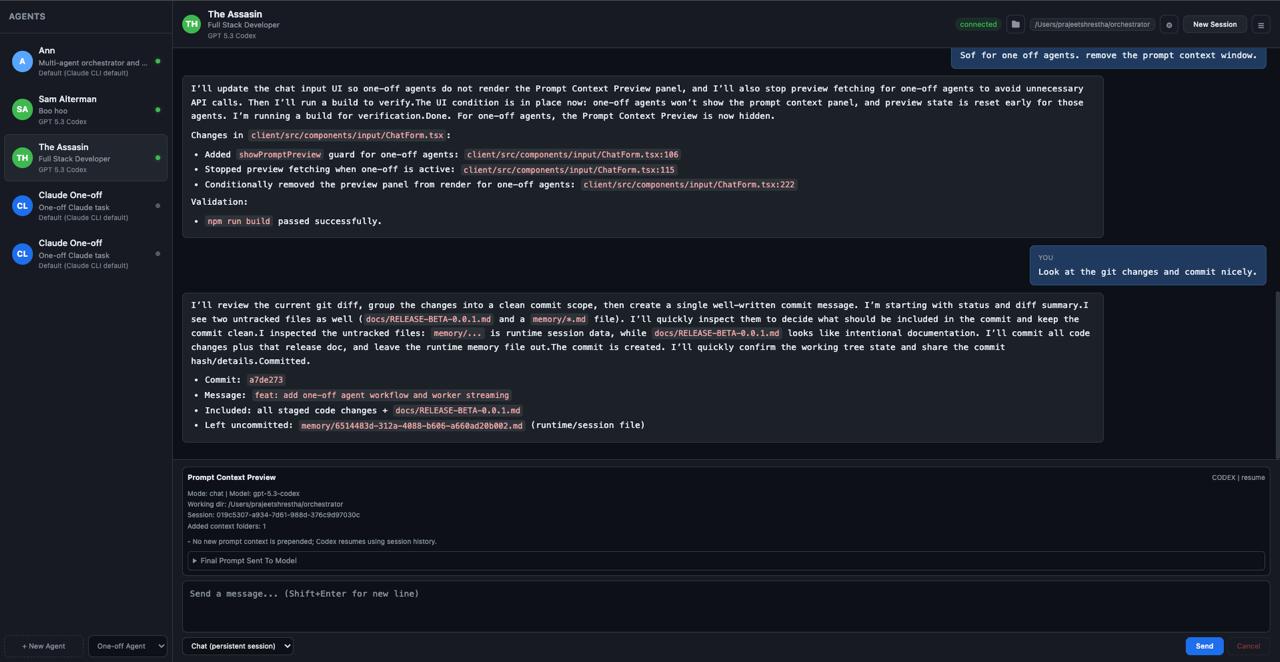

Orchestrator v0.0.2: Architecting a More Robust AI Agent Platform

From CLI processes to App Server architecture — how v0.0.2 transforms session management, adds rich media support, and sets the foundation for a scalable AI agent orchestration platform.

LLM Token Optimization: How to Stop Burning Money and Hitting Rate Limits

Practical techniques for reducing token usage, avoiding API rate limits, and running AI workloads efficiently — lessons learned from running multiple autonomous agents in production.

The Orchestrator: A Multi-Agent AI Command Center Built in One Day

How I built a system that unifies Claude Code and OpenAI Codex under a single browser-based interface — spawning, managing, and coordinating multiple AI agents in parallel.